joblib.dump¶

- joblib.dump(value, filename, compress=0, protocol=None, cache_size=None)¶

Persist an arbitrary Python object into one file.

Read more in the User Guide.

- Parameters

- value: any Python object

The object to store to disk.

- filename: str, pathlib.Path, or file object.

The file object or path of the file in which it is to be stored. The compression method corresponding to one of the supported filename extensions (‘.z’, ‘.gz’, ‘.bz2’, ‘.xz’ or ‘.lzma’) will be used automatically.

- compress: int from 0 to 9 or bool or 2-tuple, optional

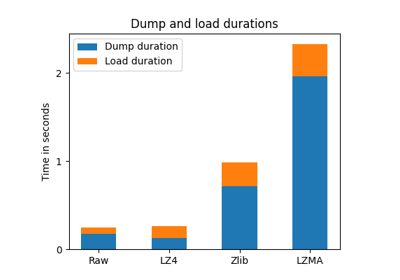

Optional compression level for the data. 0 or False is no compression. Higher value means more compression, but also slower read and write times. Using a value of 3 is often a good compromise. See the notes for more details. If compress is True, the compression level used is 3. If compress is a 2-tuple, the first element must correspond to a string between supported compressors (e.g ‘zlib’, ‘gzip’, ‘bz2’, ‘lzma’ ‘xz’), the second element must be an integer from 0 to 9, corresponding to the compression level.

- protocol: int, optional

Pickle protocol, see pickle.dump documentation for more details.

- cache_size: positive int, optional

This option is deprecated in 0.10 and has no effect.

- Returns

- filenames: list of strings

The list of file names in which the data is stored. If compress is false, each array is stored in a different file.

See also

joblib.loadcorresponding loader

Notes

Memmapping on load cannot be used for compressed files. Thus using compression can significantly slow down loading. In addition, compressed files take up extra memory during dump and load.